Abstract:

Paper:


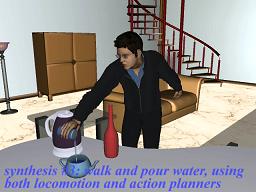

Planning Humanlike Actions in Blending Spaces. Yazhou Huang, Mentar Mahmudi and Marcelo Kallmann. IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, 2011.

Video:


(5 MB .mp4)

Bibtex:

@inproceedings{huang11iros,
  author    = {Yazhou Huang and Mentar Mahmudi and Marcelo Kallmann},
  title     = {Planning Humanlike Actions in Blending Spaces},
  booktitle = {Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  year      = {2011},
  location  = {San Francisco, California},
}

(for information on other projects, see our research and publications pages)