Abstract:

Paper:


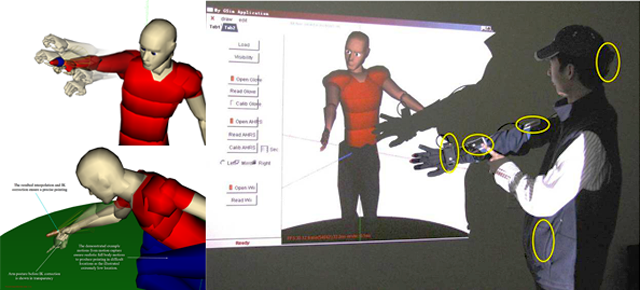

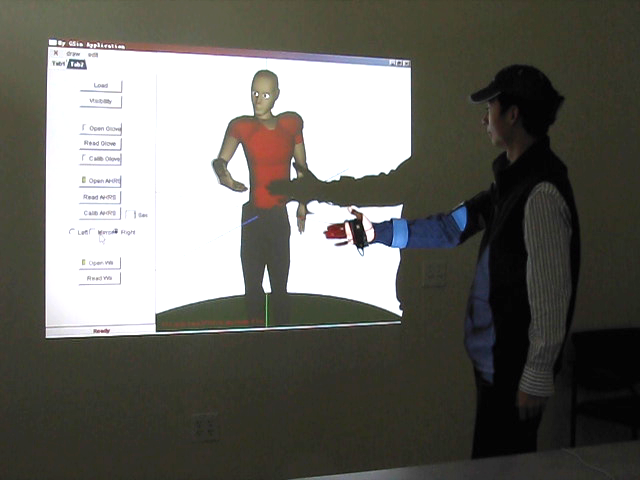

Interactive Demonstration of Pointing Gestures for Virtual Trainers
Yazhou Huang and Marcelo Kallmann
International Conference on Human-Computer Interaction (HCII), San Diego, CA, 2009

Video:


(15 MB .mov)

Bibtex:

@inproceedings{huang09hcii,
  author    = {Yazhou Huang and Marcelo Kallmann},
  title     = {Interactive Demonstration of Pointing Gestures for Virtual Trainers},
  booktitle = {Human-Computer Interaction, Part II, Proceedings of HCI International 2009, LNCS},
  year      = {2009},
  pages     = {178--187},
  location  = {San Diego, California},
  publisher = {Springer},
  address   = {Berlin}
}
