Paper:


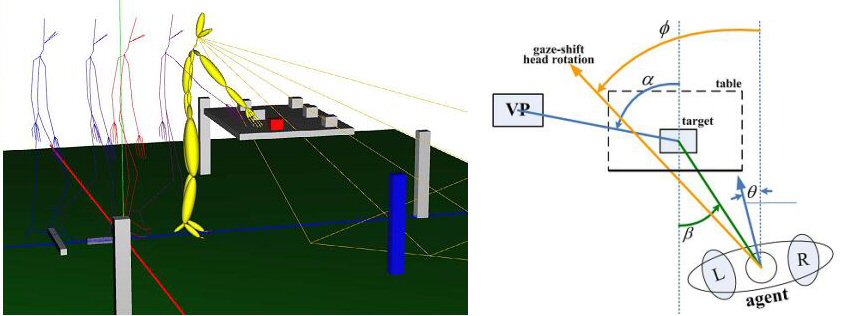

Modeling Gaze Behavior for Virtual Demonstrators
Yazhou Huang, Marcelo Kallmann, Justin L Matthews and Teenie Matlock
International Conference on Intelligent Virtual Agents, Reykjavik, Iceland, 2011
(the original publication is available at www.springerlink.com)

BibTeX:

@inproceedings{huang11iva,
  author    = {Yazhou Huang and Marcelo Kallmann and Justin L Matthews and Teenie Matlock},
  title     = {Modeling Gaze Behavior for Virtual Demonstrators},
  booktitle = {Proceedings of the 11th International Conference on Intelligent Virtual Agents (IVA)},
  year      = {2011},
  location  = {Reykjav\'ik, Iceland},
}
